Introduction

In my last post, I introduced a simple Natural Language Processing (NLP) problem: extracting mentions of programming languages from the Wikipedia article on programming languages, using both a rules-based technique and a machine learning technique. In this post, I’ll talk about how I would improve the models used in each technique.

Improving the rules-based model

Improving a rules-based model requires an expert in writing rules. The expert designs rules to cover as most of the problem space as possible.

In the last post, I used a single rule. I scanned the text for sentences that contained the word ‘language’, removed the first word, and declared any other capitalized words in those sentences to be programming languages. This rule attained approximately 53% accuracy, but had several shortcomings:

- The rule returned plenty of ‘false positives’ – mentions that were NOT programming languages, including people and corporation names

- Programming languages were missed entirely when the sentence did not contain the word ‘language’

- Non-capitalized languages were not detected

- The rule did not detect languages with multi-word names

I’ve already discussed in my Cognitive Systems Testing posts that 100% accuracy is generally impossible in a cognitive or NLP-based system, but we can definitely do better than 53%. Let’s first break down the types of errors we had.

- Precision errors, also called ‘false positives’. Think of these as finding a result you shouldn’t have found. Problem 1 is a precision error.

- Recall errors – also called ‘false negatives’. Think of these as not finding a result that you should have found. Problems 2 and 3 are recall errors.

- Wrong answer errors. Think of these as results that incur both a precision and recall penalty. Problem 4 is a wrong answer error.

Generally speaking, fixing precision errors means being more conservative in your annotations and fixing recall errors means being more liberal. There is a delicate dance to improve both competing concerns. With each new rule, the rules developer must maximize a gain in one concern while minimizing loss in the other. And, the developer must write rules with the highest bang-for-the-buck.

Problems 1 and 2 encompass the vast majority of the errors. Problem 3 affects a handful of languages and Problem 4 affects one, so let us focus our efforts on the first two problems.

Here are additional rules I would try:

First new rule, detect capitalized words in sentences with additional ‘trigger’ words. A trigger word is a word that indicates nearby word(s) are significant. I would try a expanding the list of trigger words to include ‘programming’ and ‘syntax’ in addition to ‘language’. This should improve recall.

Second new rule, detect capitalized words that are playing the role of people or corporations. Ideally, I could find another annotator that does this, since detecting people and corporations is a common problem. If not, I could build a list of trigger words that mean the next capitalization is not significant: “invented by”, “developed by”, “programmed by” are triggers that mean the next capital word is probably not a programming language.

I would test out the results of these new rules before adding any others, to make sure these rules actually worked. Achieving accuracy with a rules-based system is an iterative process, and at some point I will reach diminishing returns with every incremental rule added.

Aside from these rule improvements, I would now start using a real NLP development workbench like IBM Watson Explorer, which has better primitives and capabilities for developing rules quickly and evaluating their results.

Improving the machine-learning-based model

This section is much easier to write. With a purely machine learning-based model, you only have one thing you do can do: change the training data.

Generally, this means adding new ground truth by bringing in additional documents and annotating them by hand. However, there are cases where you might want to remove some training data if your training data is not a representative sampling of what you will actually run your model against. For instance, if you train on Wikipedia pages but test against Internet blogs, you will be disappointed in your model’s performance. Thus when adding new training documents be sure you are getting a representative mix, and when you are uploading document segments be sure to send use some beginning, middle, and ending segments. Variation is key.

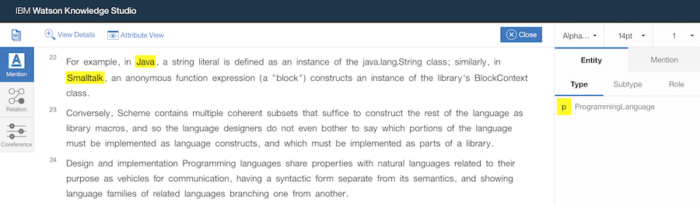

When you add new documents to your training set, you can use your existing machine-learning model to “pre-annotate” those documents. This reduces the amount of work you the human annotator have to do, since you now only need to correct mistakes the model made. When the model has a precision error (false positive), you simply undo the annotation. You still have to scan the whole document to fix recall errors (false negatives), since the model didn’t annotate something it should have. And you can do this process iteratively: train a model, collect/pre-annotate new ground truth, train the model again, collect/pre-annotate more new ground truth, etc, until your desired accuracy is attained.

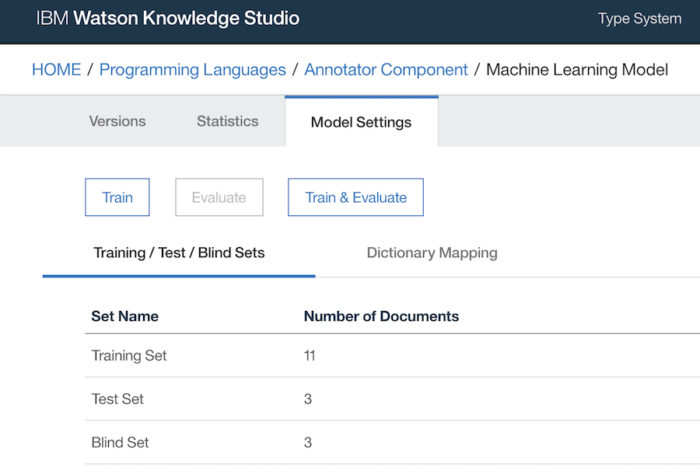

Since I developed a model entirely from one Wikipedia article, and only trained the model against ~70% of that article, I clearly need to add more training data. I would improve this model by adding more documents about computer programming: blog posts, journal articles, and other technical sources.

See video demonstration of how I added new training documents and used my existing model to pre-annotate them:

Conclusion

In this post I talked about how I would specifically fix the very simple NLP models I produced with both rules-based and machine-learning-based techniques. In my next post I will generically discuss the pros and cons of both approaches.

Very informative. I would like to ask that is improving a rule means optimizing it? I mean is rule optimization and improvement are similar concepts?

*are rule optimization and improvement similar concepts?

Yes, I would call them similar.