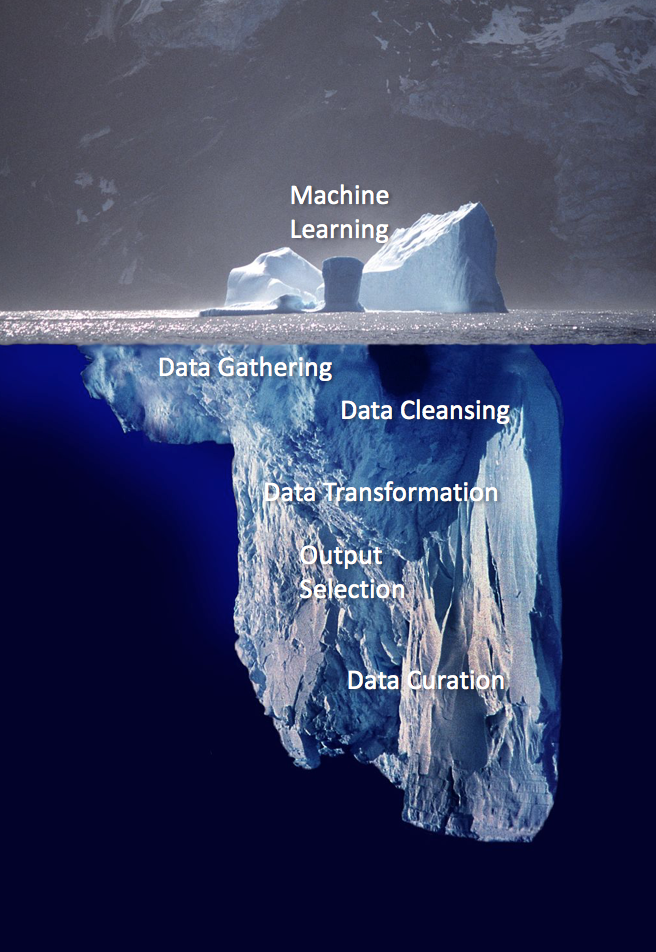

Machine learning. Such a cool name, and such a powerful technology. But applying it successfully to your problems takes a lot of work on your part. Beware of these five tasks, each of which may take longer than you expect.

1. Data gathering (source documents)

You need to gather a lot of data for a successful machine learning exercise (see my other post, “Why does machine learning require so much data?”). This data may be spread across disparate document stores, email clients, chat logs, databases, spreadsheets, and so on. Finding all the source documents that contain the data you need takes time.
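A useful first step is simply taking inventory of what you have. The sketch below assumes your documents sit on a filesystem (a shared drive, say) and counts files by extension so you can see which formats you will have to deal with. It does not reach into email clients, chat logs, or databases; those need their own export or query step. The function name `inventory` is hypothetical.

```python
import tempfile
from collections import Counter
from pathlib import Path


def inventory(root):
    """Count files under root by lowercase extension ('' for files with none)."""
    counts = Counter()
    for path in Path(root).rglob("*"):
        if path.is_file():
            counts[path.suffix.lower()] += 1
    return counts


# Demo on a throwaway directory standing in for a shared drive.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["a.pdf", "b.PDF", "notes/c.xlsx", "mail/d.eml"]:
        p = Path(tmp, name)
        p.parent.mkdir(parents=True, exist_ok=True)
        p.write_text("placeholder")
    demo_counts = inventory(tmp)

print(demo_counts)
```

Lowercasing the suffix treats `.PDF` and `.pdf` as the same format, which is usually what you want when estimating conversion work.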

2. Data cleansing and normalization

Depending on your choice of machine learning algorithm, your data may need to be strongly typed. If a numeric field has the values “30”, “50”, and “about 75”, the algorithm can’t do anything with the mixture of “about” and “75”. If your data has a lot of holes (e.g., blank cells in Excel), that severely limits what you can do until you fill them in. If you have an enumerated field with two allowed values, say “basic” and “advanced”, but the actual values include “basic”, “advanced”, “ADVANCED”, and “really advanced”, then you really have four values unless you normalize them down to the original two. This could require a simple find-and-replace (hours) or extensive manual review (days or weeks).
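The cleanup described above can be partly automated. The sketch below uses the examples from the paragraph: it pulls the number out of free text like “about 75” and maps spelling variants onto the two canonical levels. The mapping `LEVEL_MAP` is an assumption; whether “really advanced” belongs under “advanced” is a judgment call someone has to make. Anything unrecognized (or blank) comes back as `None` so it can be routed to manual review rather than silently guessed.

```python
import re
from typing import Optional

# Hypothetical mapping from observed spellings (after lowercasing) to the
# two canonical levels. Deciding that "really advanced" means "advanced"
# is an assumption a human must confirm.
LEVEL_MAP = {
    "basic": "basic",
    "advanced": "advanced",
    "really advanced": "advanced",
}

NUMBER_RE = re.compile(r"-?\d+(?:\.\d+)?")


def parse_number(raw: str) -> Optional[float]:
    """Extract the first number from text like 'about 75'; None if blank or absent."""
    match = NUMBER_RE.search(raw)
    return float(match.group()) if match else None


def normalize_level(raw: str) -> Optional[str]:
    """Trim and lowercase, then map to a canonical level; None if unrecognized."""
    return LEVEL_MAP.get(raw.strip().lower())


rows = [("30", "basic"), ("about 75", "ADVANCED"), ("", "really advanced"), ("50", "expert")]
for raw_num, raw_level in rows:
    print(parse_number(raw_num), normalize_level(raw_level))
```

Note that `parse_number` throws away the “about”; if approximate values matter for your problem, keep a separate flag column instead of discarding that information.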

3. Data transformation

Depending on its format, you may have to transform your data before machine learning can use it. Off-the-shelf tools exist for some conversions; Watson’s Document Conversion Service handles a handful, for instance HTML to plain text. For others you may need to write shell scripts (hours of effort) or build full-fledged ETL processes (weeks or months). A document that is easy for a human to read is not necessarily easy for a computer to parse, and the gap is especially wide for complex PDF structures.
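For simple cases you can script a conversion yourself. As an illustration of the HTML-to-plain-text case, here is a minimal sketch using Python’s standard-library `html.parser`; it is not how Watson’s service works, just a stand-in. It assumes reasonably well-formed HTML, skips `<script>` and `<style>` contents, and starts a new line at a few block-level tags (the `BLOCK` set is an assumption you would tune).

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}
    BLOCK = {"p", "div", "br", "li", "h1", "h2", "h3", "tr"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes entities like &amp;
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag in self.BLOCK:
            self.parts.append("\n")  # block elements start a new line

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered text
    # Collapse runs of whitespace within each line and drop empty lines.
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)


print(html_to_text("<h1>Title</h1><p>Hello &amp; welcome</p><script>var x=1;</script><p>Bye</p>"))
```

Something this small is the “hours of effort” end of the spectrum; tables, nested layouts, and PDFs are where the weeks or months come in.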

4. Output selection

You need to define the target for most machine learning algorithms. If you want it to classify text into groups, what are the target groups? If you want it to extract entities from your text, what are the types of entities you want to extract? What is the dependent variable you want to predict? And once you define the output, you have to get everyone to agree on it!
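Writing the agreed output down in code gives everyone one definition to point at and lets you check labels against it mechanically. The sketch below assumes a text classifier whose target groups are the hypothetical categories `billing`, `technical`, and `account`; substitute whatever your team agrees on.

```python
from enum import Enum


class TicketCategory(Enum):
    """Hypothetical agreed-upon target classes for a text classifier."""

    BILLING = "billing"
    TECHNICAL = "technical"
    ACCOUNT = "account"


ALLOWED = {category.value for category in TicketCategory}


def invalid_labels(labels):
    """Return labels outside the agreed schema, each once, in first-seen order."""
    found = []
    for label in labels:
        if label not in ALLOWED and label not in found:
            found.append(label)
    return found


print(invalid_labels(["billing", "sales", "technical", "sales", "Billing"]))
```

Labels that fail the check (a new category someone invented, or a capitalization slip) are exactly the disagreements you want to surface early.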

5. Data curation

Once you have extracted your raw data and defined the outputs, you need to map each of your inputs to an output. If you are classifying documents into several types, you need to sort your documents into those types. If you are building a question-answering system, you need to assemble a collection of question/answer pairs.
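Curated examples need to live somewhere durable. One common convention is JSON Lines (one JSON object per line); the sketch below assumes that format, with hypothetical `text`/`label` fields and example data, and counts examples per label so you can see which categories still need more curation.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path


def save_examples(path, examples):
    """Write one labeled example per line (JSON Lines)."""
    with Path(path).open("w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example) + "\n")


def load_examples(path):
    """Read examples back, skipping blank lines."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def label_counts(examples):
    """Count examples per label to spot under-represented categories."""
    return Counter(example["label"] for example in examples)


# Hypothetical curated examples for a document classifier.
curated = [
    {"text": "Invoice #123 is overdue", "label": "billing"},
    {"text": "App crashes on login", "label": "technical"},
    {"text": "Refund not received", "label": "billing"},
]

with tempfile.TemporaryDirectory() as tmp:
    dataset_path = Path(tmp, "train.jsonl")
    save_examples(dataset_path, curated)
    reloaded = load_examples(dataset_path)

print(label_counts(reloaded))
```

The same layout works for question/answer pairs: store `{"question": ..., "answer": ...}` objects instead and skip the label count.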

What comes next? Iteration!

These steps cannot simply be applied in a waterfall process. In all likelihood, as you build a machine learning model you will need to adjust some or all of the previous steps as you see what works well and what doesn’t (see my other post, “When can you stop iterating?”). Adjusting one step may in turn require you to repeat or adjust the others.

Conclusion

A successful machine learning exercise requires a lot of preparation work. It is not uncommon to spend 90% of your time on this preparation and only 10% on the modeling, though your mileage will vary depending on your data and the problem you are trying to solve. Each of these steps could take days, weeks, or months. Skimping on these steps is penny-wise and pound-foolish and leads to bad results. It may not be glamorous or exciting, but this hidden work is required for success.