Machine learning models learn by example. They need to be trained on a data representative of what they will be asked to make predictions against. Collecting the necessary volume and variety of training data can be time consuming. Let’s drop in on a conversation between a machine learning adopter and a machine learning sage.

“We don’t have time to collect training data. Can we shortcut or eliminate this process?”

No.

You need to find out how users actually want to use the system and find examples of the data they will throw against it. If you’re building an email classifier, you need real emails. If you are building a complaint analyzer, you need real complaints. If you are building a chatbot, you need real chat logs.

“That sounds hard. That kind of data isn’t available, it will take too long to collect, we don’t want to bug our end-users to provide this kind of data. I thought AI and machine learning were smart. Do we really have to collect this kind of data?”

Yes.

At first denial sets in. Thoughts swirl: “We don’t have this data.” “We shouldn’t have to provide this data.” As in the stages of grief, denial gives way to anger. “We thought this was going to be easy.” Then anger gives way to bargaining. “What if we fabricate the training data? After all, we know how the end-users will use this tool and what kind of data they will throw against it. In no time at all we can generate lots of simulated training data. Will that be enough?”

No *.

“Hey, you caveated that last one. We’re saved!”

Simulating training data merely postpones your training problem. The size of the problem is directly proportional to the difference between your fabricated training data and the real data your end-users will eventually provide. As described in my “Machine Learning in Space” analogy, non-representative data is space junk that hurts your model.

“That’s great, I know exactly the kinds of data that will be thrown at this model. Thanks for this get out of jail free card!”

Designers have been touting User Research for ages for a very good reason. Nobody knows their end users as well as they think they do. You may feel you’re different but the odds are not in your favor. Simulated training data can be used to bootstrap an initial model but simulated training data should be replaced with real and representative data as soon as possible. You should add time for an iteration cycle or two to your development plan, otherwise you have a significant risk. (Anna Chaney has a great AI Rules of Engagement post you should read including how to replace your simulated data.) The gap between what data you think the model will see and what data the model actually will see is larger than you think. This gap is directly proportional to the size of your future problem.

“Ok, that caveat spooked me. Can I reduce the size of this risk?”

Yes!

There are lots of ways to gather realistic training data for a system. Sometimes you already have the data and it’s just a matter of collating it, through visiting application logs, chat logs, emails, or a bunch of documents sitting on someone’s hard drive. Sometimes you can build a mock interface. I often train chatbots with a simple UI inviting users to ask a question of a new chatbot, when they type and hit submit this goes straight into a training data bucket. Or build a *tiny* MVP. When you examine the data coming out of this kind of exercise you’ll see some things you expected to see but also some surprises. These surprises are exactly what you would not have trained your model to handle.

“Thanks, machine learning guru!”

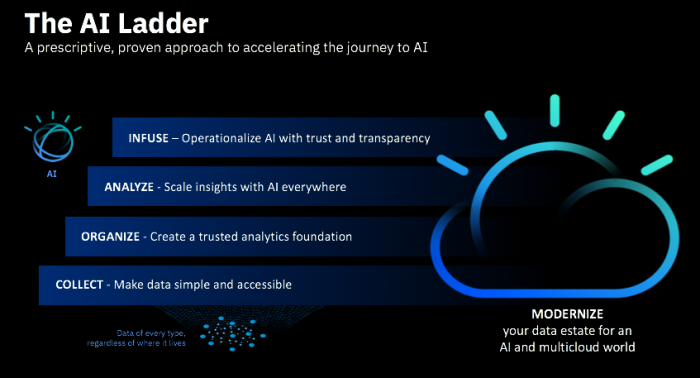

As popularized in the AI Ladder, a successful machine learning or AI project has four key steps. Collecting and organizing data for use by the model forms a solid foundation and without this foundation AI cannot succeed. Data collection and organization is not fun, not nearly as much fun as building a model, but it is a critical step and cannot be shortchanged. If you skip or skimp these steps up front you will end up revisiting them later!